An exploration of GPT-3's knowledge of Minetest modding, with node registration, formspec manipulation, mod creation, and Minecraft mod to Minetest conversion.

Read it on blog.rubenwardy.com

I'll repost what I replied to someone else:

It's about as good as a beginner modder.

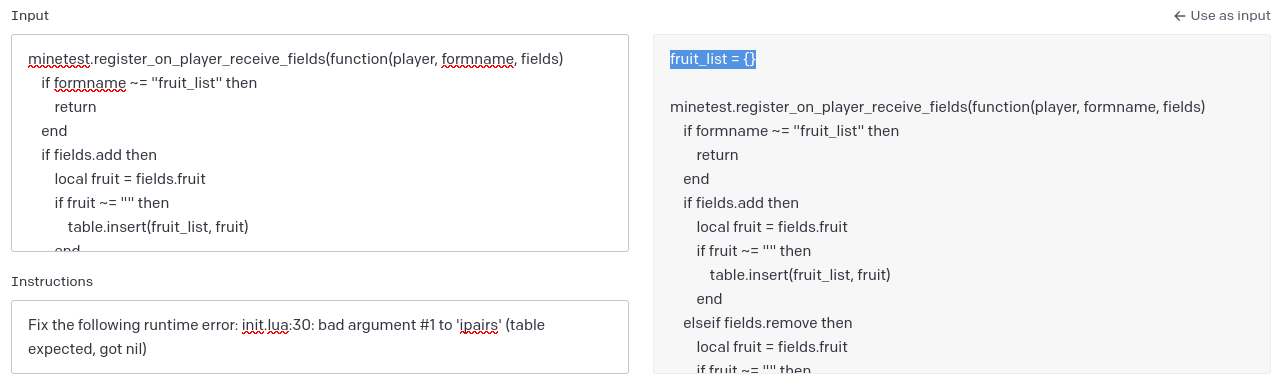

If you look in the formspec section, it generates complete code for a problem that almost works; it just has one missing variable. If you feed it the error message, it is able to fix the issue, just like a real programmer does.

So if you could combine this tool with a way of testing its results, it would be very capable.
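To make the "generate, test, feed the error back" idea concrete, here is a rough sketch in Python. Everything in it is an assumption for illustration: `repair_loop`, `fake_generate` and `run_check` are hypothetical names, the "model" is a stub that forgets a variable on its first try, and the check just executes a Python snippet rather than loading a real Minetest mod.

```python
from typing import Callable, Optional, Tuple


def repair_loop(
    generate: Callable[[str, Optional[str]], str],
    check: Callable[[str], Optional[str]],
    prompt: str,
    max_attempts: int = 3,
) -> Tuple[Optional[str], int]:
    """Ask `generate` for code, test it with `check`, and feed any error back."""
    feedback = None
    for attempt in range(1, max_attempts + 1):
        code = generate(prompt, feedback)
        error = check(code)
        if error is None:
            return code, attempt  # code passed the check
        feedback = error  # next attempt sees the error message
    return None, max_attempts  # gave up


def fake_generate(prompt: str, feedback: Optional[str]) -> str:
    # Hypothetical stand-in for a model call: the first answer
    # forgets to define `name`, the second one fixes it.
    if feedback is None:
        return "greeting = 'Hello ' + name"
    return "name = 'singleplayer'\ngreeting = 'Hello ' + name"


def run_check(code: str) -> Optional[str]:
    # Stand-in test harness: run the snippet in an empty namespace
    # and report the error message, or None if it ran cleanly.
    try:
        exec(code, {})
    except Exception as exc:
        return f"{type(exc).__name__}: {exc}"
    return None


code, attempts = repair_loop(fake_generate, run_check, "greet the player")
print(attempts)
```

In a real setup the check would have to run the generated mod in a sandboxed Minetest instance, since executing untested generated code directly is risky.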

Remember that this is an early generation of the tool as well. I fully expect subsequent tools to be much more accurate.

Also, this tool was never trained specifically on Lua and Minetest. Codex only really officially supports Python, JS, and a few other languages, so training it specifically on Lua and Minetest code should make the results better.

> ruben's article wrote: GPT-3 (and Codex) learned how to write Minetest code by reading code on the Internet. This code may or may not be open source, and may or may not be permissively licensed. These models tend to regurgitate code, which leads to license laundering - open source code being turned into proprietary code, without credit. These products benefit from the unpaid labour of the open-source community. So whilst this technology is interesting, I’m not sure how much I agree with it ethically.

This sounds quite problematic.

> ShadMOrdre wrote (Sat Jun 25, 2022 17:50):
> You can bet that an AI that scrubs the web for code is only gathering openly published code, regardless of license, but of course including most if not all "official" open source, and no proprietary code whatsoever.
>
> You can bet that this AI does NOT have access to Windows or iOS code, but does have access to Linux code.
>
> You can bet that if a corporation can make money, it will.
>
> And you can bet that their attorneys can most likely outspend you.

Correct, it won't have the Windows kernel-space code or most of the other parts of their OS, but it will have most of the .NET Framework, since most of that is now open source, especially with .NET 5.

> Chen, Tworek, Jun et al 2021 wrote: We find that, to the extent the generated code appears identical to the training data, it is due to the predictive weightings in the model rather than retention and copying of specific code.

> ShadMOrdre wrote (Sat Jun 25, 2022 17:50):
> This is not good. On any level. Attribution and licensing are only the superficial issues involved here. Minetest mods notwithstanding, can it generate backdoor/worm/virus code, in assembly? I'd bet it can, given all the open source code available on GitHub alone, much less SourceForge, GitLab, and any other public repository.
>
> Rubenwardy only asked very tailored and specific questions of this AI, and the AI generated relevant working code. What happens when the questions become less ethical, or outright criminal?
>
> There goes any career in computer programming...
>
> Don't say we weren't warned of the dangers.

This is mostly quite alarmist.
